Access eReefs data

Programmatic server access

Learn how to extract eReefs data from the AIMS eReefs THREDDS server for multiple dates and points with OPeNDAP in R.

This tutorial builds on the techniques introduced in Access eReefs data: Basic server access.

In this tutorial we will look at how to get eReefs data from the AIMS eReefs THREDDS server corresponding to the logged locations of tagged marine animals. Keep in mind, however, that the same methodology can be applied in any situation where we wish to extract eReefs data for a range of points with different dates of interest for each point.

Preparation

Create a folder named data.

Download the satellite tracking data file into your data folder.

R packages

library(RNetCDF) # to access server data files via OPeNDAP

library(readr) # to efficiently read in data

library(janitor) # to create consistent, 'clean' variable names

library(tidyverse) # for data manipulation and plotting with ggplot2

library(lubridate) # for working with date and time variables

library(leaflet) # to create an interactive map of the tracking locations

library(knitr); library(kableExtra) # for better table printing

Motivating problem

The tracking of marine animals is commonly used by researchers to gain insights into the distribution, biology, behaviour and ecology of different species. However, knowing where an animal was at a certain point in time is only one piece of the puzzle. To start to understand why an animal was where it was, we usually require information on things like: What type of habitat is present at the location? What were the environmental conditions like at the time? What other lifeforms were present at the tracked location (e.g. for food or mating)?

In this tutorial we will pretend that we have tracking data for Loggerhead Sea Turtles and wish to get eReefs data corresponding to the tracked points (in time and space) to understand more about the likely environmental conditions experienced by our turtles.

Example tracking data

We will use satellite tracking data for Loggerhead Sea Turtles (Caretta caretta) provided in Strydom (2022). This dataset contains tracking detections that span the length of the Great Barrier Reef, off the east coast of Queensland, Australia, from December 2021 to April 2022 (shown in Figure 1).


This dataset is a summarised representation of the tracking locations per 1-degree cell. This implies a coordinate uncertainty of roughly 110 km. This level of uncertainty renders the data virtually useless for most practical applications, though it will suffice for the purposes of this tutorial. Records that fall on land as a result of this uncertainty have been removed, and from here on we will simply treat the coordinates as accurate.

# Read in data

loggerhead_data <- read_csv("data/Strydom_2022_DOI10-15468-k4s6ap.csv") |>

clean_names() |> # clean up variable names

rename( # rename variables for easier use

record_id = gbif_id,

latitude = decimal_latitude,

longitude = decimal_longitude,

date_time = event_date

)

# Remove land based records (as a result of coordinate uncertainty)

land_based_records <- c(4022992331, 4022992326, 4022992312, 4022992315, 4022992322, 4022992306)

loggerhead_data <- loggerhead_data |>

dplyr::filter(!(record_id %in% land_based_records))

# Select the variables relevant to this tutorial

loggerhead_data <- loggerhead_data |>

select(longitude, latitude, date_time, record_id, species)

# View the tracking locations on an interactive map

loggerhead_data |>

leaflet() |>

addTiles() |>

addMarkers(label = ~date_time)

Extracting data from the server

We will extend the basic methods introduced in the preceding tutorial Accessing eReefs data from the AIMS eReefs THREDDS server to extract data for a set of points and dates.

We will extract the eReefs daily mean temperature (temp), salinity (salt), and east- and northward current velocities (u and v) corresponding to the coordinates and dates for the tracking detections shown in Table 1.


# Print table of tracking detections (Strydom, 2022)

loggerhead_data |>

arrange(date_time) |>

mutate(date = format(date_time, "%Y-%m-%d"), time = format(date_time, "%H:%M")) |>

select(date, time, longitude, latitude) |>

kable() |> kable_styling() |> scroll_box(height = "300px", fixed_thead = TRUE)

| date | time | longitude | latitude |

|---|---|---|---|

| 2021-12-21 | 17:57 | 152.5 | -24.5 |

| 2022-01-02 | 21:49 | 153.5 | -25.5 |

| 2022-01-05 | 07:33 | 152.5 | -23.5 |

| 2022-01-06 | 05:03 | 151.5 | -23.5 |

| 2022-01-09 | 20:25 | 151.5 | -22.5 |

| 2022-01-13 | 06:28 | 151.5 | -21.5 |

| 2022-01-14 | 18:26 | 150.5 | -21.5 |

| 2022-01-17 | 17:06 | 150.5 | -20.5 |

| 2022-01-19 | 17:44 | 149.5 | -20.5 |

| 2022-01-21 | 07:22 | 149.5 | -19.5 |

| 2022-01-23 | 07:02 | 148.5 | -19.5 |

| 2022-01-27 | 17:00 | 147.5 | -18.5 |

| 2022-01-30 | 17:02 | 146.5 | -18.5 |

| 2022-02-02 | 09:14 | 146.5 | -17.5 |

| 2022-02-03 | 21:37 | 153.5 | -24.5 |

| 2022-02-06 | 18:25 | 146.5 | -16.5 |

| 2022-02-07 | 07:15 | 145.5 | -16.5 |

| 2022-02-09 | 18:33 | 145.5 | -15.5 |

| 2022-02-12 | 08:59 | 153.5 | -26.5 |

| 2022-02-12 | 10:34 | 145.5 | -14.5 |

| 2022-03-25 | 07:10 | 144.5 | -13.5 |

| 2022-04-01 | 18:41 | 143.5 | -12.5 |

| 2022-04-09 | 22:00 | 143.5 | -11.5 |

| 2022-04-14 | 06:31 | 143.5 | -10.5 |

| 2022-04-21 | 10:30 | 143.5 | -9.5 |

We will take advantage of the consistent file naming on the server to extract the data of interest programmatically. We will first need to copy the OPeNDAP data link for one of the files within the correct model and aggregation folders and then replace the date.

Selecting a random date within the daily aggregated data with one data file per day (daily-daily) for the 1km hydro model (gbr1_2.0), we see the files have the naming format:

https://thredds.ereefs.aims.gov.au/thredds/dodsC/ereefs/gbr1_2.0/daily-daily/EREEFS_AIMS-CSIRO_gbr1_2.0_hydro_daily-daily-YYYY-MM-DD.nc
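Because only the date changes between file names, the link for any given day can be assembled from a fixed prefix, the date in YYYY-MM-DD form, and the .nc suffix. A minimal sketch of this is given below. Note that `build_ereefs_url()` is a hypothetical helper name introduced here for illustration; it is not part of any package.

```r
# Hypothetical helper (not from any package): build the OPeNDAP link for a
# daily gbr1_2.0 hydro file from a date, using the naming format shown above.
build_ereefs_url <- function(date) {
  file_prefix <- paste0(
    "https://thredds.ereefs.aims.gov.au/thredds/dodsC/ereefs/gbr1_2.0/",
    "daily-daily/EREEFS_AIMS-CSIRO_gbr1_2.0_hydro_daily-daily-"
  )
  file_suffix <- ".nc"
  # format() on a Date gives the YYYY-MM-DD string used in the file names
  paste0(file_prefix, format(as.Date(date), "%Y-%m-%d"), file_suffix)
}

# Works for a single date or a vector of dates
example_url <- build_ereefs_url("2022-01-17")
print(example_url)
```

Whether a file actually exists for a given date still depends on what is published on the server, so a link built this way should be treated as a candidate until it has been opened successfully.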

We will now write a script which extracts the data for the dates and coordinates in Table 1. For each unique date we will open the corresponding file on the server and extract the daily mean temperature, salinity, and eastward and northward current velocities for each set of coordinates corresponding to that date.
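The extraction reads one value per variable by giving a start index and a count along each dimension of the server variable. The sketch below mimics that indexing on a small local array rather than a server file, assuming the dimension order (longitude, latitude, k, time). The helper `read_hyperslab()` and the toy array sizes are hypothetical and used only for illustration; they do not reflect the real grid size.

```r
# Toy 4D array standing in for a server variable such as "temp".
# Assumed dimension order: (longitude, latitude, k, time).
# The sizes are illustrative only, not the real grid dimensions.
n_lon <- 5; n_lat <- 4; n_k <- 16; n_time <- 1
toy_temp <- array(
  seq_len(n_lon * n_lat * n_k * n_time),
  dim = c(n_lon, n_lat, n_k, n_time)
)

# Hypothetical helper mimicking start/count semantics on a local array:
# 'start' is the first index along each dimension and 'count' is the
# number of values to read along each dimension.
read_hyperslab <- function(x, start, count) {
  idx <- Map(function(s, n) seq(s, length.out = n), start, count)
  do.call(`[`, c(list(x), idx, list(drop = TRUE)))
}

# A single value: 3rd longitude, 2nd latitude, depth layer k = 16, time 1
single_value <- read_hyperslab(toy_temp, start = c(3, 2, 16, 1), count = c(1, 1, 1, 1))

# All 16 depth layers for the same cell: start at k = 1 and count 16 layers
depth_profile <- read_hyperslab(toy_temp, start = c(3, 2, 1, 1), count = c(1, 1, 16, 1))

print(single_value)
print(length(depth_profile))
```

Changing only the count vector is enough to move from a single value to a profile or a block of cells, which is why the same start and count vectors can be reused for every variable that shares these dimensions.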

# GET DATA FOR EACH DATE AND COORDINATE (LAT LON) PAIR

t_start <- Sys.time() # to track run time of extraction

## 1. Setup variables for data extraction

# Server file name = <file_prefix><date (yyyy-mm-dd)><file_suffix>

file_prefix <- "https://thredds.ereefs.aims.gov.au/thredds/dodsC/ereefs/gbr1_2.0/daily-daily/EREEFS_AIMS-CSIRO_gbr1_2.0_hydro_daily-daily-"

file_suffix <- ".nc"

# Table of dates and coordinates for which to extract data (dates as character string)

detections <- loggerhead_data |>

mutate(date = as.character(as_date(date_time))) |>

select(date, longitude, latitude) |>

distinct()

extracted_data <- data.frame() # to save the extracted data

dates <- unique(detections$date) # unique dates for which to open server files

## 2. For each date of interest, open a connection to the corresponding data file on the server

for (i in seq_along(dates)) {

date_i <- dates[i]

# Open file

file_name_i <- paste0(file_prefix, date_i, file_suffix)

server_file_i <- open.nc(file_name_i)

# Coordinates for which to extract data for the current date

coordinates_i <- detections |> dplyr::filter(date == date_i)

# Get all coordinates in the open file (each representing the center-point of the corresponding grid cell)

server_lons_i <- var.get.nc(server_file_i, "longitude")

server_lats_i <- var.get.nc(server_file_i, "latitude")

## 3. For each coordinate (lon, lat) for the current date, get the data for the closest grid cell (1km^2) from the open server file

for (j in seq_len(nrow(coordinates_i))) {

# Current coordinate of interest

lon_j <- coordinates_i[j,]$longitude

lat_j <- coordinates_i[j,]$latitude

# Find the index of the grid cell containing our coordinate of interest (i.e. the center-point closest to our point of interest)

lon_index <- which.min(abs(server_lons_i - lon_j))

lat_index <- which.min(abs(server_lats_i - lat_j))

# Setup start vector arguments for RNetCDF::var.get.nc (same for temp, salt, currents u & v)

###################################

# Recall the order of the dimensions (longitude, latitude, k, time) from the previous tutorial. Therefore we want [lon_index, lat_index, k = 16 corresponding to a depth of 0.5m, time = 1 (as we're using the daily files this is the only option)]. If you are still confused, go back to the previous tutorial or have a look at the structure of one of the server files by uncommenting the following line of code (it prints the structure once only, for the first file and first coordinate):

# if (i == 1 && j == 1) print.nc(server_file_i)

##################################

start_j <- c(lon_index, lat_index, 16, 1) # k = 16 corresponds to depth = 0.5m

count_j <- c(1, 1, 1, 1) # only extracting a single value for each variable

# Get the data for the grid cell containing our point of interest

temp_j <- var.get.nc(server_file_i, "temp", start_j, count_j)

salt_j <- var.get.nc(server_file_i, "salt", start_j, count_j)

u_j <- var.get.nc(server_file_i, "u", start_j, count_j)

v_j <- var.get.nc(server_file_i, "v", start_j, count_j)

extracted_data_j <- data.frame(date_i, lon_j, lat_j, temp_j, salt_j, u_j, v_j)

## 4. Save data in memory and repeat for next date-coordinate pair

extracted_data <- rbind(extracted_data, extracted_data_j)

}

# Close connection to open server file and move to the next date

close.nc(server_file_i)

}

# Calculate the run time of the extraction

t_stop <- Sys.time()

extract_time <- t_stop - t_start

# Rename the extracted data columns

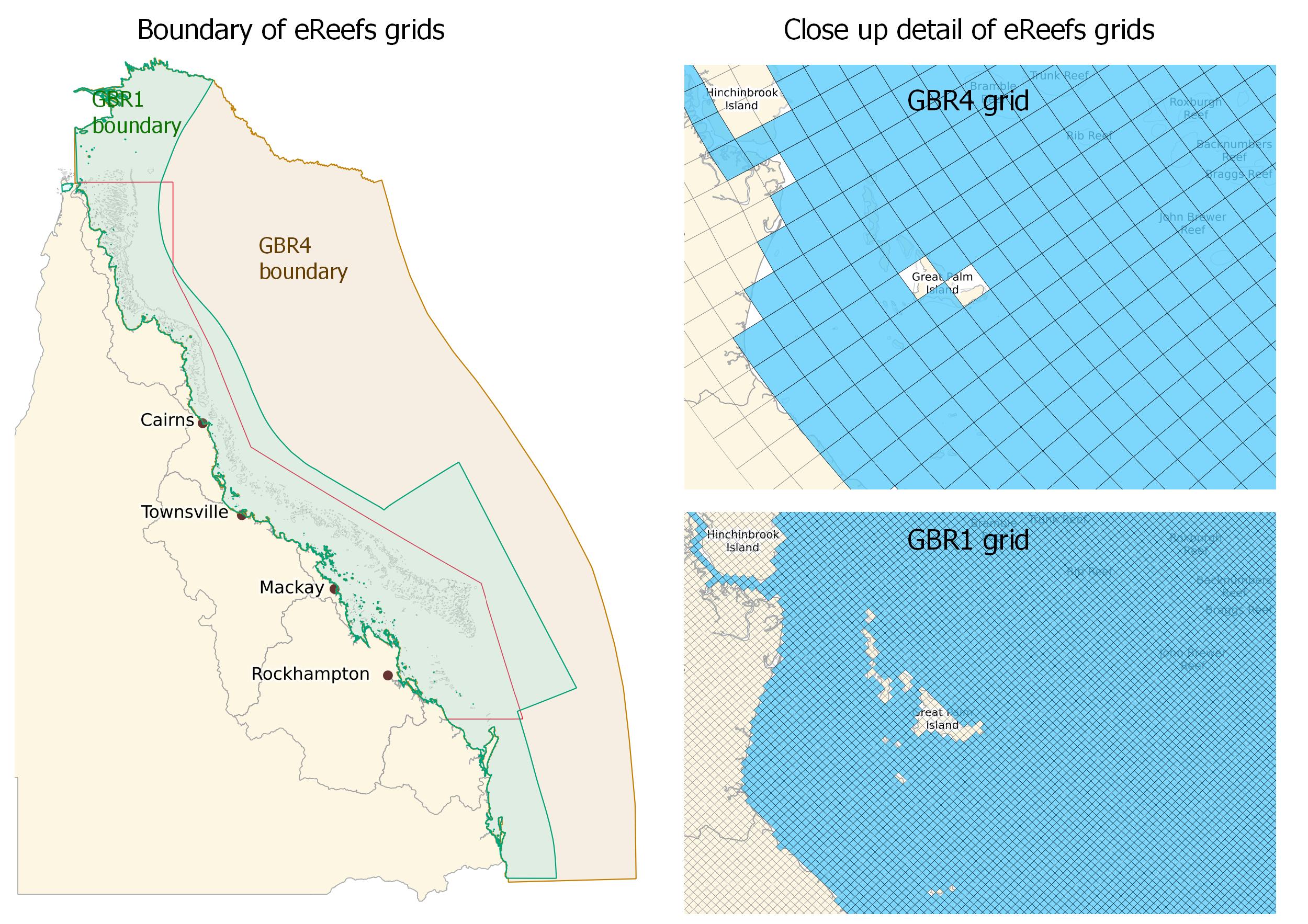

colnames(extracted_data) <- c("date", "lon", "lat", "temp", "salt", "u", "v")In the code above we match the closest eReefs model grid cell to each point in our list of coordinates (i.e. for each tracking detection). This will therefore match grid cells to all the coordinates, even if they are not within the eReefs model boundary. This behaviour may be useful when we have points right along the coastline as the eReefs models have small gaps at many points along the coast (see image below). However, in other cases this behaviour may not be desirable. For example, if we had points down near Sydney they would be matched to the closest eReefs grid cells (somewhere up near Brisbane) and the extracted data would be erroneous.
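One way to guard against points far outside the model boundary is to accept the nearest grid value only when it lies within some tolerance of the point. The sketch below shows the idea on a toy latitude grid. The helper `nearest_index()`, the grid extent and spacing, and the 0.02 degree tolerance are all assumptions made for illustration and are not read from the server.

```r
# Hypothetical helper: index of the nearest grid value, or NA when the
# nearest value is further away than 'max_diff' (in degrees).
nearest_index <- function(grid_values, target, max_diff) {
  diffs <- abs(grid_values - target)
  i <- which.min(diffs)
  if (diffs[i] > max_diff) NA_integer_ else i
}

# Toy latitude grid (assumed extent and spacing, not read from the server)
toy_lats <- seq(-28, -8, by = 0.01)

# A point within the toy grid extent
inside_idx <- nearest_index(toy_lats, -24.5, max_diff = 0.02)

# A point at roughly Sydney's latitude, well south of the toy grid
outside_idx <- nearest_index(toy_lats, -33.87, max_diff = 0.02)

print(toy_lats[inside_idx])
print(is.na(outside_idx))
```

A check like this only catches points beyond the grid extent. Points inside the extent but over land or in a coastal gap are a separate case that this sketch does not handle, and a tight tolerance would also give up the coastline behaviour described above.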

Our extracted data is shown below in Table 2. To get this data we opened 24 files on the server (corresponding to unique dates in Table 1) and extracted data for 25 unique date-coordinate pairs. On our machine this took 1.2 mins to run.

# Print the extracted data

extracted_data |> kable() |> kable_styling() |> scroll_box(height = "300px", fixed_thead = TRUE)

| date | lon | lat | temp | salt | u | v |

|---|---|---|---|---|---|---|

| 2022-01-17 | 150.5 | -20.5 | 29.39174 | 35.33709 | -0.0659649 | -0.1778789 |

| 2022-01-02 | 153.5 | -25.5 | 26.00014 | 35.29583 | -0.0353987 | 0.0375804 |

| 2021-12-21 | 152.5 | -24.5 | 28.09089 | 35.25638 | 0.0713334 | -0.0199901 |

| 2022-01-27 | 147.5 | -18.5 | 29.46284 | 33.98060 | 0.2652802 | 0.0044857 |

| 2022-02-12 | 145.5 | -14.5 | 29.89639 | 34.71634 | -0.0812021 | 0.0344770 |

| 2022-02-12 | 153.5 | -26.5 | 26.79947 | 35.38062 | -0.1235716 | 0.0057869 |

| 2022-01-23 | 148.5 | -19.5 | 28.98567 | 35.26142 | -0.1370672 | -0.0219267 |

| 2022-04-14 | 143.5 | -10.5 | 29.47597 | 34.41768 | -0.0409690 | 0.0776469 |

| 2022-01-19 | 149.5 | -20.5 | 29.91821 | 35.47683 | 0.0181383 | -0.1044010 |

| 2022-01-09 | 151.5 | -22.5 | 29.24492 | 35.33996 | 0.0007144 | -0.1032533 |

| 2022-01-14 | 150.5 | -21.5 | 28.98728 | 35.40287 | -0.0638922 | -0.0470999 |

| 2022-04-09 | 143.5 | -11.5 | 29.93064 | 34.55591 | -0.0967079 | 0.1442562 |

| 2022-01-21 | 149.5 | -19.5 | 29.57756 | 35.11963 | -0.1611882 | -0.0347497 |

| 2022-01-30 | 146.5 | -18.5 | 30.14936 | 34.59603 | -0.1835063 | 0.1426468 |

| 2022-02-03 | 153.5 | -24.5 | 27.06327 | 35.32142 | 0.5498420 | -0.7972959 |

| 2022-02-09 | 145.5 | -15.5 | 29.54386 | 34.74210 | -0.1198241 | 0.0636992 |

| 2022-02-07 | 145.5 | -16.5 | 30.37080 | 34.06056 | -0.1037723 | 0.2000399 |

| 2022-03-25 | 144.5 | -13.5 | 29.04090 | 34.75312 | -0.4569216 | 0.3603792 |

| 2022-01-13 | 151.5 | -21.5 | 28.41784 | 35.25340 | -0.0951453 | 0.0155211 |

| 2022-04-01 | 143.5 | -12.5 | 30.14290 | 34.44403 | 0.0250692 | -0.0175750 |

| 2022-04-21 | 143.5 | -9.5 | 29.56355 | 34.19133 | 0.0587259 | 0.0340937 |

| 2022-01-05 | 152.5 | -23.5 | 25.64322 | 35.22873 | 0.0303043 | 0.0149218 |

| 2022-02-06 | 146.5 | -16.5 | 29.13773 | 34.69858 | -0.1224007 | -0.1002772 |

| 2022-01-06 | 151.5 | -23.5 | 28.12745 | 35.40966 | -0.0329330 | 0.0152895 |

| 2022-02-02 | 146.5 | -17.5 | 30.58525 | 34.71669 | 0.1280997 | -0.0543357 |
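The eastward (u) and northward (v) velocity components can be combined into a current speed and a direction of flow if that is easier to interpret. The sketch below applies the standard vector relationships to two rows of values copied from the table above. The data frame `currents` and its new column names are introduced here for illustration, and the direction is expressed as the bearing the water is flowing towards, in degrees clockwise from north.

```r
# Two rows of u and v values copied from the extracted data table
currents <- data.frame(
  date = c("2022-02-03", "2022-01-27"),
  u = c(0.5498420, 0.2652802),   # eastward component
  v = c(-0.7972959, 0.0044857)   # northward component
)

# Speed is the length of the (u, v) vector
currents$speed <- sqrt(currents$u^2 + currents$v^2)

# Bearing the current flows towards, in degrees clockwise from north.
# atan2(u, v) is 0 for flow due north and 90 for flow due east.
currents$direction_deg <- (atan2(currents$u, currents$v) * 180 / pi) %% 360

print(currents)
```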

Matching extracted data to tracking data

We will match up the eReefs data with our tracking detections by combining the two datasets based on common date, longitude and latitude values.

# Ensure common variables date, lon and lat between the two datasets

extracted_data <- extracted_data |>

rename(longitude = lon, latitude = lat) |>

mutate(date = as_date(date)) # convert the character dates to Date to match loggerhead_data

loggerhead_data <- loggerhead_data |>

mutate(date = as_date(date_time))

# Merge the two datasets based on common date, lon and lat values

combined_data <- merge(

loggerhead_data, extracted_data,

by = c("date", "longitude", "latitude")

) |> select(-date)

# Print the combined data

combined_data |> kable() |> kable_styling() |> scroll_box(height = "300px", fixed_thead = TRUE)

| longitude | latitude | date_time | record_id | species | temp | salt | u | v |

|---|---|---|---|---|---|---|---|---|

| 152.5 | -24.5 | 2021-12-21 17:57:22 | 4022992328 | Caretta caretta | 28.09089 | 35.25638 | 0.0713334 | -0.0199901 |

| 153.5 | -25.5 | 2022-01-02 21:49:55 | 4022992329 | Caretta caretta | 26.00014 | 35.29583 | -0.0353987 | 0.0375804 |

| 152.5 | -23.5 | 2022-01-05 07:33:53 | 4022992304 | Caretta caretta | 25.64322 | 35.22873 | 0.0303043 | 0.0149218 |

| 151.5 | -23.5 | 2022-01-06 05:03:23 | 4022992302 | Caretta caretta | 28.12745 | 35.40966 | -0.0329330 | 0.0152895 |

| 151.5 | -22.5 | 2022-01-09 20:25:01 | 4022992319 | Caretta caretta | 29.24492 | 35.33996 | 0.0007144 | -0.1032533 |

| 151.5 | -21.5 | 2022-01-13 06:28:09 | 4022992308 | Caretta caretta | 28.41784 | 35.25340 | -0.0951453 | 0.0155211 |

| 150.5 | -21.5 | 2022-01-14 18:26:17 | 4022992318 | Caretta caretta | 28.98728 | 35.40287 | -0.0638922 | -0.0470999 |

| 150.5 | -20.5 | 2022-01-17 17:06:32 | 4022992330 | Caretta caretta | 29.39174 | 35.33709 | -0.0659649 | -0.1778789 |

| 149.5 | -20.5 | 2022-01-19 17:44:38 | 4022992320 | Caretta caretta | 29.91821 | 35.47683 | 0.0181383 | -0.1044010 |

| 149.5 | -19.5 | 2022-01-21 07:22:08 | 4022992316 | Caretta caretta | 29.57756 | 35.11963 | -0.1611882 | -0.0347497 |

| 148.5 | -19.5 | 2022-01-23 07:02:18 | 4022992323 | Caretta caretta | 28.98567 | 35.26142 | -0.1370672 | -0.0219267 |

| 147.5 | -18.5 | 2022-01-27 17:00:04 | 4022992327 | Caretta caretta | 29.46284 | 33.98060 | 0.2652802 | 0.0044857 |

| 146.5 | -18.5 | 2022-01-30 17:02:42 | 4022992314 | Caretta caretta | 30.14936 | 34.59603 | -0.1835063 | 0.1426468 |

| 146.5 | -17.5 | 2022-02-02 09:14:56 | 4022992301 | Caretta caretta | 30.58525 | 34.71669 | 0.1280997 | -0.0543357 |

| 153.5 | -24.5 | 2022-02-03 21:37:56 | 4022992313 | Caretta caretta | 27.06327 | 35.32142 | 0.5498420 | -0.7972959 |

| 146.5 | -16.5 | 2022-02-06 18:25:38 | 4022992303 | Caretta caretta | 29.13773 | 34.69858 | -0.1224007 | -0.1002772 |

| 145.5 | -16.5 | 2022-02-07 07:15:30 | 4022992310 | Caretta caretta | 30.37080 | 34.06056 | -0.1037723 | 0.2000399 |

| 145.5 | -15.5 | 2022-02-09 18:33:03 | 4022992311 | Caretta caretta | 29.54386 | 34.74210 | -0.1198241 | 0.0636992 |

| 145.5 | -14.5 | 2022-02-12 10:34:12 | 4022992325 | Caretta caretta | 29.89639 | 34.71634 | -0.0812021 | 0.0344770 |

| 153.5 | -26.5 | 2022-02-12 08:59:01 | 4022992324 | Caretta caretta | 26.79947 | 35.38062 | -0.1235716 | 0.0057869 |

| 144.5 | -13.5 | 2022-03-25 07:10:49 | 4022992309 | Caretta caretta | 29.04090 | 34.75312 | -0.4569216 | 0.3603792 |

| 143.5 | -12.5 | 2022-04-01 18:41:42 | 4022992307 | Caretta caretta | 30.14290 | 34.44403 | 0.0250692 | -0.0175750 |

| 143.5 | -11.5 | 2022-04-09 22:00:54 | 4022992317 | Caretta caretta | 29.93064 | 34.55591 | -0.0967079 | 0.1442562 |

| 143.5 | -10.5 | 2022-04-14 06:31:48 | 4022992321 | Caretta caretta | 29.47597 | 34.41768 | -0.0409690 | 0.0776469 |

| 143.5 | -9.5 | 2022-04-21 10:30:09 | 4022992305 | Caretta caretta | 29.56355 | 34.19133 | 0.0587259 | 0.0340937 |

Hooray! We now have our combined dataset of the Loggerhead Sea Turtle tracking detections and the corresponding eReefs daily aggregated data (Table 3).
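By default, merge() keeps only the rows that match in both datasets, so a detection with no matching eReefs data would be dropped without any warning. As an optional check, the sketch below uses all.x = TRUE on toy data to keep every detection and list the ones left unmatched. The data frames and values here are illustrative only and are not taken from the tracking dataset.

```r
# Toy tracking detections (values are illustrative only)
toy_detections <- data.frame(
  date = as.Date(c("2022-01-17", "2022-01-19", "2022-01-21")),
  longitude = c(150.5, 149.5, 149.5),
  latitude = c(-20.5, -20.5, -19.5)
)

# Toy extracted data, deliberately missing the last detection
toy_extracted <- data.frame(
  date = as.Date(c("2022-01-17", "2022-01-19")),
  longitude = c(150.5, 149.5),
  latitude = c(-20.5, -20.5),
  temp = c(29.4, 29.9)
)

# all.x = TRUE keeps every detection and fills unmatched rows with NA
toy_combined <- merge(
  toy_detections, toy_extracted,
  by = c("date", "longitude", "latitude"),
  all.x = TRUE
)

# Detections for which no extracted data was found
unmatched <- toy_combined[is.na(toy_combined$temp), ]
print(unmatched)
```

An empty `unmatched` table indicates that every detection found a partner in the extracted data.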

Strydom A. 2022. Wreck Rock Turtle Care - satellite tracking. Data downloaded from OBIS-SEAMAP; originated from Satellite Tracking and Analysis Tool (STAT). DOI: 10.15468/k4s6ap accessed via GBIF.org on 2023-02-17.